Reciprocal Merge

April 01, 2025

Project Status: Active

Tech Stack: Typescript, React, Tauri, AWS (Lambda + API gateway)

Ever since she started her most recent job, my partner had begun to identify systems and processes that just didn't make sense. One of them, something that she was expected to do a few times a week, required her to walk through the exact same steps of manual look up, copy from one sheet to another, do some math, etc. When she asked why it wasn't automated or suggested ways to change the process that would significantly reduce effort, she was met with blank faces and disregarded.

When she mentioned the problem to me and asked if there was any way we could automate things, I was in the thick of things with work (and in a pretty bad place mentally overall). I was able to clobber together a basic document merge engine, but I couldn't go much further than that. While I would have loved to help out and work through it, I just didn't have the time or energy to get to it. So up it went on the "possible projects" shelf.

Fast forward about a year and I found myself out of work and into sabbatical life. I was feeling rejuvenated and ready to play around with something, and that's when I remembered the automation tool. So we sat down and walked through the process, going over what information comes from which places, what kind of math or copy/pasting had to be done. After a few days, we'd outlined the bulk of what needed to be done, and I got to work.

For reference, the completed project can be found on github in two pieces:

- The main app: https://github.com/fuzzylimes/reciprocal-merge

- The proxy: https://github.com/fuzzylimes/reciprocal-merge-proxy

- The app: https://fuzzylimes.github.io/reciprocal-merge/

The Initial Problem

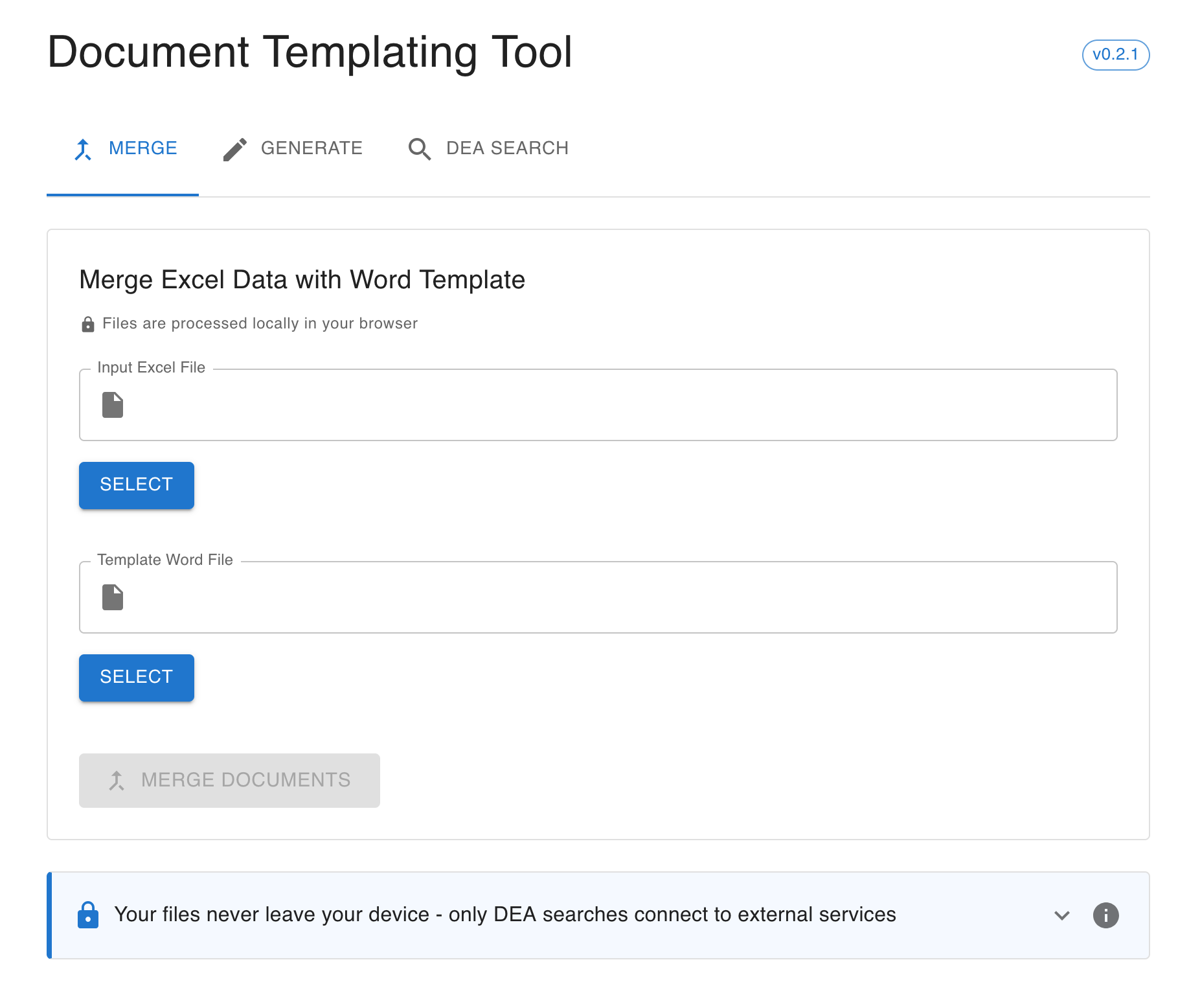

The task to be automated involved collecting a bunch of data from multiple sources, doing some math on some data, and then conditionally filling out a Word doc with this data. As mentioned, I'd already created a basic merge tool, which allowed her to create a Word template (not even this was being used for this process...), an Excel file with her data that she hand copied/calculated, and finally merge into a Word doc. While this worked, there was far too much manual work that could lead to human error.

The next logical step was to automate the creation of the Excel file that she was manually building. By allowing her to "upload" the source documents, we could write an application that would take in the sources, do the stuff she was doing by hand, and spit out the Excel file for validation. Then once that was verified, it could be passed off into the merge tool to generate the final document.

At this stage we had two requirements:

- Make the app capable of generating the Excel file

- Make the app capable of generating the Word doc

Initial Planning

I started by laying out the items that I knew for certain:

- I would use web technology (React) to build an app

- I wanted the app to handle all of the data manipulation using common libraries like

xlsxanddocxtemplater - Everything happens in the app - no network calls, no server functions

- I wanted the app to be built into a standalone application, not run in browser

My thinking for the 3rd option above was that I didn't want the user to be "uploading" files in the browser. I figured that if there was some kind of security software, it might flag this activity as uploading docs to some unprotected site. So to bypass that concern, it would be a desktop application, with networking disabled, running completely isolated.

I had done something similar for the document merge engine, which had been an electron app rather than a web app. However, rather than generating some giant 200MB package using Electron, this time I opted to give Tauri v2 a try. The last time I'd played with Tauri was in the early v1 days, but I remembered all of the security features it had baked into it like the network control.

The rest of the logic needed to handle all of the various transformations and extractions was specific to my partner's job, so there's no point in mentioning any of those specifics here.

Phase 1

The tool itself was going to be quite involved, required to create many different data sets, so I wanted to pare things back to start with. The goal was to get a small subset working, build the executable, and make sure that everything would work fine on my partner's work computer. And since it was already completed, include the merge app into the same package - why not.

Working together we identified a small subset of data points that would be the simplest to pull in for the needed generation, and I worked on banging something out. In this phase I was not concerned about code structure or organization at all. Making things pretty would come later once I knew that things would work. I find one of the most distracting things about development to be that little voice of doubt in the back of my head that starts asking useless questions like "Are you sure you want to do it that way?" or "You're repeating some code here, you could do this cleaner." While it means well, this voice is just a distracting asshole.

The initial phase ended up looking something like this:

- Generating the Tauri project and setting things up (i.e. everything outlined in this section of the readme)

- Deciding that each "tool" would live on its own tab, and how to handle that navigation (simple switch, no fancy router)

- Bringing over the code for handling the existing merge functionality

- Adding a tab for the "Generation" app, that will prompt for a specific set of files

- Logic to handle the reading and manipulation of those files

- Logic to construct data set to be exported

- Logic to export that data as an Excel doc

- Automated github action that would handle the building of the Tauri app and publishing the generated binaries (handled by tarui-actions)

Reading over that now, I guess it was a bit ambitious for a phase one, but each of them really were necessary. I needed to ensure Tauri would have access to read files off the computer, that I could then handle that data inside of the UI code, and then write a file out to the computer. Really the only things I could have skipped would have been for the new Generation code, but it would need to get done eventually.

Phase 1 Results

All of this was pretty painless to get working. Probably the most time was spent fiddling around with github actions to get the build working, since it takes a long (long) time to build (10+ minutes on mac, 15+ minutes on windows). That is by far the biggest downside to Tauri over something like electron. Electron apps may be bloated, but they build super fast.

The github action is set up to spit out an exe or msi, so we downloaded and installed it on my partner's work computer. Immediately we got a notification from CrowdStrike asking wtf we were doing, but we ignored it and pressed on to testing. It started up just fine, navigation worked fine, file upload worked great. Everything seemed to be golden. I was ready to move forward with the real meat and potatoes.

That was until a Team's message popped up from someone with the title of "Director of IT." Of course the CrowdStrike notification went back to them. Of course they were concerned about it. So they started to ask if she had installed any software that would have caused the alert to pop up. We debated over keeping it hidden but ultimately decided the best action was to be up front. We explained the app, linked them to the github repo for what I was doing, and I sent them a message myself explaining details of the tech, how it worked, and offered to answer any questions. The response was lukewarm to say the least, and mentioned they'd be by her desk tomorrow to talk.

Long story short: company policy stated that no external applications could be downloaded to the computer. Fair enough. Thankfully they were very cool about it, and no kind of disciplinary actions came from it (I did not sleep well that night). However, it did mean that my "app first" approach went out the window.

It's worth noting that the Electron app that I built for her before that only handled the merging did NOT flag CroudSource. Maybe it was the installation versus running an exe? Not sure. Just thought it was interesting.

Phase 2

Well, if it can't run inside of Tauri, at least it's still a web app. And if my partner can't install anything, then I guess she'll have to do it in the browser.

Thus began the refactor process of updating the code base to support both a Tauri app as well as a standalone web app. The idea was to simply host the tool using github pages, that way I wouldn't have to worry about deploying anything anywhere, and the build/deployment could be controlled easily enough with github actions.

The main challenge here was figuring out how to support both Tauri and the web for dealing with files. Tauri exposes a lot of APIs that make it easy enough. The web was not built to act like a desktop app. So a new system service needed to be created to provide a bridge. Thankfully Tauri provides a utility function to check if the app is running inside of a Tauri window, so that could be used to branch to the correct file handling logic.

The next challenge was blocking network calls. Like I mentioned before, the entire point of using something like Tauri is that I had fine grain control over what permissions the app had access to (like network). The browser is literally designed to make network calls. So I needed to come up with a hack to disable those calls. So with the help of Claude, we came up with this nightmare. It's a hack, there's no other way to describe it. Did this need to be created? Probably not. Realistically, there should have been no network calls being made given what was in the code, but I really, REALLY, didn't want to risk some rogue package sending data off somewhere. So it was added.

At first all network calls were blocked, but later on this had to be updated to include an

allowedDomainsvalue to support one specific network call.

With that complete, there were a handful of other minor changes needed to support running on the web. I also added a new github action to handle the build and deployment of the app.

Phase 2 Results

No surprised, the app worked just fine. No security warnings, no compatibility issues - just worked.

Now that we'd confirmed that the basic setup was going to work, I could get started on the actual guts of the app.

Phase 3

I'm not going to bore you with the technical details here. This phase was a lot of testing and refinement. I was still focused on building out exactly what was needed to solve the problem, so everything was purpose built. No clean or pretty code, just trying to get things working. This was when I had the first real "Aha" moment, thinking "The customer (my partner) doesn't give a shit how the code looks. All they care about is whether or not it solves their problem and is easy to use." I feel like it's really easy as a developer to get hung up on the "right way" to do something (like I said, that inner voice is an asshole), that sometimes we lose sight of the actual goal.

Iterate, test, refine. Repeat. That was this phase. It was by far the longest phase, but by the end, the tool was completing about 90% of the work that would normally take days in about 10 seconds. Needless to say, my customer was very satisfied.

Phase 4

With a working tool in place, and my partner successfully using it to do her job, I could finally step back and look at the giant mess of spaghetti code in front of me. Usually I view refactoring and refinement as a necessary evil, but this time I tried to approach it as a puzzle. I knew that things were ugly, with duplicated code all over the place, purpose built functions instead of generics, and no real sense of "flow" with the tool itself.

Some extra context might be helpful here. The merge tool allows the user to reference variables from different sheets within an Excel file. The way that my partner organized the data was across many different sheets (~25). This "Generator" part of the tool was responsible for building the Excel file with each of those sheets populated. Up until this point, those sheets were more or less handled individually. There was no system in place, just raw function calls to build out what was needed.

The biggest change of the entire factor was the creation of the TemplateGenerator class. Before this point, managing all of the files and data used in generating the output Excel file was... bad. Global variables, random values being tracked in some cases; to be honest, I've blocked a lot of it out of my mind. This TemplateGenerator class brought all of that together, providing a way to load in each of the needed files, "import" their data, manipulate that data, and make that data accessible to other parts that need it.

This was a massive undertaking, as it required full data modeling of what was being read in, as well as data modeling of in-between translations. While I knew what I had was working, there was a lot of "magic" that needed to be translated over into the type system.

Once it was done, there was far less magic than what I started with. There was a flow that could be followed without having to hop all over the code base. While I still don't think it's perfect, it's definitely clean enough in order to maintain moving forward.

Phase 5

With the refactoring completed and deployed (and some new bugs popping up), my partner brought me a new feature request. As part of the Generation app, we were identifying when specific Ids were being referenced in a data set that didn't exist inside of the "DataBase" Excel file. When that happened, she needed to manually go look up these Ids, populate the "DataBase" file, and then run again. She asked that I come up with a way to automate this task.

First step was understanding what she was doing to collect the data for each of the Ids. Her company used some 3rd party tool that required login in order to search, so we started by understanding how that was done, researching into a possible API, and seeing if we could replicate the calls ourselves using the browser console. In the end, we were going to need to simulate a browser request with some credentials, and then parse the output HTML. Not great, but not terrible.

When it came to implementation, my first instinct was to have this new "Search" tool take in the DB Excel file and automatically append the new data on to the end of it. What I found is that the xlsx library that I was using (the free version) was quite limited when it came to replicating something that already existed. No matter what I tried, I could not find a way to keep the styling and formulas in place while appending data to the end of the doc, so I decided to pivot to a more manual solution.

The solution was to generate a table in the tool itself that could be copy/pasted into the DB file. While not perfect (the DB file has to be closed before it can be uploaded...), it allowed me to move forward with delivering a feature that would save hours of time. After talking with my partner, we both decided that 10 seconds of copy paste was better than the alternative of doing nothing at all.

So I got to work, building out the logic to handle making the request to the site and parsing the response back. Thankfully the site used consistent element tags, so the parser wasn't too terrible to write.

Then I deployed and tested locally and... CORS errors. Of course.

In my eagerness to knock out the feature, I'd failed to consider the fact that I wouldn't be able to make the call to the 3rd party tool from within my site. All of the requests were rightfully denied: my origin did not match. I was indeed doing cross origin requests.

So it was time to build a proxy. Super simple, super stupid. Pass in the id to be searched and the auth token, return the page data. That's all it does, and my CORS issues were over.

Phase 5 Results

The search tool worked a charm. The copy/pasting was hardly an inconvenience. At this point nearly 98% of the entire process was automated, with the remaining 2% not being worth the effort.

The only issue that's come up has been regarding timeouts. It appears that the 3rd party site can be incredibly slow sometimes (>30 seconds), resulting in query timeouts. While I've created a bug to address the issue, the work around in the meantime is to simply run the query again. It's not a big deal.

Takeaways

I was really pleased with how much the Tauri ecosystem has grown. While the plugin system was a bit confusing at first, they've done a fantastic job making it easy to grant access as needed using single commands that install everything you need. It feels like a real product now, so I'm not surprised that I see more and more people advocating for it online. It's just a shame that my implementation with it isn't being used.

This was the first time I worked on a project with someone that was outside of the tech industry. While my partner has a good grasp of tech and understands what's going on with the created app, there was a language barrier when it came to discussing the various data flows. I've never been in a "contractor" position before, but I imagine this experience was very similar to what they go through. I love my partner to death, but our brains work very differently, so it took an extra level of patience when we discovered missed scenarios or requirements.

It really feels good to build something that you know will have an impact, especially when you can see that impact every day. Perhaps I've become jaded over the years, but most of the time work feels like building the wrong things, throwing it into the void, and never hearing anything back. A small-ish project like this was great in helping me remember that the software process doesn't have to be that way. At the end of the day, we should (hopefully) be building software to improve someone else's job and hopefully not creating more problems that need more solutions.